As requested by a reader, our Egghead to English™ Translation Service has translated the paper Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning into the vernacular, so you have a hope of understanding what the authors are saying.

Bakerloo: What the hell are they going on about in this paper?

Alex: Imagine you run a big photo-sorting service.

Millions of pictures arrive every day: cats, cars, trees, people, food. You want a computer system that can look at each image and say, “That’s a cat,” or “That’s a bicycle,” without a human labeling them.

Around 2015–2016, this problem—getting computers to recognize what’s in images—was a major frontier. Lots of groups were building “deep learning” systems: huge stacks of simple pattern-recognizing steps that, together, become very good at spotting shapes, textures, and objects.

Two designs were leading the race:

- One family called Inception (also known as GoogLeNet), which looked at each picture in several ways at once—small details, bigger patterns, even bigger patterns—and then combined those views.

- Another family called ResNet, which added a simple trick: instead of forcing each layer to invent something totally new, it let each layer say, “Take what we already know, plus this small correction.” That small change made very deep systems much easier to train.

The paper you’re reading asks a straightforward question:

“What if we combine these two ideas—Inception’s clever multi-view blocks and ResNet’s ‘small corrections’ trick? Do we get a system that learns faster and performs better?”

The authors build several versions of this hybrid and compare them to a cleaned-up “pure” Inception design on a very big, standard test called ImageNet (a huge collection of labeled photos used as a benchmark).

They find three main things:

- When they add the ResNet-style “small corrections” on top of Inception blocks, the system learns much faster. It reaches good performance in fewer training steps.

- The final accuracy is a bit better, but not dramatically—this is evolution, not magic. The value is mostly in training speed and stability.

- They hit a practical problem: if the “correction” part is too strong, the whole system can become unstable and stop learning. The fix is surprisingly simple: turn the correction down—scale it by a small factor before adding it. That’s enough to keep things calm while still getting the benefit.

Finally, when they combine several of these models and average their opinions, they reach record-breaking performance on the benchmark of the time.

So, for a person who knows nothing about the application domain, the paper is about this:

- How to design a large, complex pattern-recognition system so that:

  - It can be very deep and powerful,

  - It still learns reliably, and

  - It uses its “improvement voice” gently enough not to crash itself.

In the larger story of AI, this paper is one step in a long engineering effort: figuring out how to stack lots of simple parts into a big system that sees the world more clearly—without falling apart under its own complexity.

Bakerloo: Can you give me more specifics, and perhaps a glossary?

Alex: Sure.

Here’s an Egghead → English™ pass on the paper — not a line-by-line translation, but a faithful, plain-language walkthrough of what it’s actually saying and doing.

1. What problem are they trying to solve?

Around 2015–2016, two big families of image-recognition networks were hot:

- Inception (a.k.a. GoogLeNet) – clever, highly engineered building blocks with multiple branches at each layer.

- ResNet – simpler blocks, but with skip connections (“residual connections”) that help very deep networks learn without getting stuck.

Both did great on ImageNet, but in different ways.

The paper asks:

“What if we take the fancy ‘Inception’ blocks and wire them together using ResNet-style skip connections? Do we get the best of both worlds?”

And behind that:

- Does adding residual connections to Inception make training faster?

- Does it improve final accuracy?

- Are there any stability problems?

- Can we design a “clean” new Inception (v4) in the process?

2. Quick refresher: What are Inception and ResNet in human terms?

Inception, in plain language

In a normal CNN, each layer is like one type of filter applied uniformly.

In an Inception block, each layer is more like:

“Let’s look at the image with several kinds of filters in parallel — small, medium, large — plus a shortcut path. Then we stitch all those results together.”

So an Inception block:

- Has multiple parallel paths (1×1, 3×3, 5×5-ish convolutions).

- Uses tricks to keep computation manageable (like breaking 5×5 into two 3×3s, factorizing 3×3 into 1×3 then 3×1, etc.).

- Tries to be efficient but expressive.
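
To make the “several filters in parallel, then stitch” idea concrete, here is a toy sketch on a one-dimensional signal instead of an image. Everything in it is an assumption for illustration: the helper names `conv1d_same` and `inception_block_1d` are made up, and the hand-picked averaging kernels stand in for the learned filters a real Inception block would use.

```python
def conv1d_same(signal, kernel):
    """Slide an odd-length kernel over the signal, zero-padding the edges
    so the output has the same length as the input."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def inception_block_1d(signal):
    """Toy Inception-style block: look at the signal at three scales in
    parallel, then stack the three views as separate output channels."""
    return [
        conv1d_same(signal, [1.0]),              # width 1: pass-through view
        conv1d_same(signal, [1 / 3, 1 / 3, 1 / 3]),  # width 3: small window
        conv1d_same(signal, [0.2] * 5),          # width 5: wider window
    ]


signal = [0.0, 0.0, 1.0, 0.0, 0.0]
channels = inception_block_1d(signal)
for name, channel in zip(["width 1", "width 3", "width 5"], channels):
    print(name, [round(v, 3) for v in channel])
```

The single spike in the input shows up sharp in the narrow branch and smeared out in the wider ones, which is the “small details, bigger patterns” picture in miniature.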

ResNet, in plain language

ResNet says:

“Instead of each block trying to learn a completely new transformation of the input, let’s let it learn just a correction (a residual) and then add that correction back to the original.”

Mathematically it’s “output = input + change”, but verbally it’s:

- “You don’t have to reinvent everything. Just tweak what we already have.”

- The skip connection (input → output directly) helps gradients flow backwards, making training deep networks easier.
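
A rough way to see why the skip connection helps gradients is to shrink every layer down to a single number. This is a toy model, not anything from the paper: the function name `end_to_end_gradient` and the slope of 0.01 are invented for illustration.

```python
def end_to_end_gradient(per_layer_slope, depth, residual):
    """Derivative of the final output with respect to the input for a
    stack of identical scalar layers.

    Plain layer:    y = w * x      ->  dy/dx = w
    Residual layer: y = x + w * x  ->  dy/dx = 1 + w
    The chain rule multiplies the per-layer derivatives together.
    """
    factor = (1.0 + per_layer_slope) if residual else per_layer_slope
    return factor ** depth


plain = end_to_end_gradient(0.01, depth=20, residual=False)
skip = end_to_end_gradient(0.01, depth=20, residual=True)
print(f"plain stack:    {plain:.3e}")
print(f"residual stack: {skip:.3f}")
```

With weak layers, the plain stack's gradient all but vanishes after 20 layers, while the residual stack keeps a usable signal because every layer contributes a factor close to 1.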

3. The big idea of the paper

They propose and test three main models:

- Inception-ResNet-v1

  - Roughly the “same scale” as Inception-v3.

  - Inception blocks + residual connections.

- Inception-ResNet-v2

  - Larger, more powerful version.

  - Compute cost similar to their new big model, Inception-v4.

- Inception-v4

  - A cleaned-up, purely Inception-style network with no residual connections.

  - More systematic design than earlier Inception versions.

They then compare:

- How fast they learn (training speed, convergence).

- How well they perform (accuracy on ImageNet).

Key headline:

Residual connections on top of Inception dramatically speed up training, and give a small bump in final performance — as long as you stabilize them properly.

4. How do they actually build Inception-ResNet?

They start with the idea of an Inception block (multi-branch filters) and wrap it in a residual shell:

- The multi-branch Inception block computes some transformation F(x).

- Then, instead of just outputting F(x), they output x + scaled(F(x)).

So each block is:

“Take input x, do fancy Inception processing, then add a scaled version of that result back to x as a correction.”

They define specific block types:

- Inception-ResNet-A

- Inception-ResNet-B

- Inception-ResNet-C

Each has different filter sizes and layouts (like “small receptive field”, “medium”, “large”) but conceptually:

- Multiple branches.

- 1×1 convs to compress/expand channels.

- Residual sum at the end.

These are stacked many times (dozens of blocks) with downsampling in between, forming a deep network.
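
Here is a minimal sketch of that recipe at a single pixel, where a 1×1 convolution is just a small matrix multiply over the channels. It is an illustration under stated assumptions, not the paper's layer definitions: the function names, the tiny weight matrices, and the choice of a linear map plus ReLU for each branch are all made up, and spatial filtering is left out entirely.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def inception_resnet_block(x, branch_weights, projection, scale=0.1):
    """Toy block: parallel branches -> concatenate -> 1x1 projection back
    to the input width -> add a scaled copy to the input."""
    # Each hypothetical branch is a linear map followed by ReLU.
    branch_outputs = [relu(matvec(w, x)) for w in branch_weights]
    # Stitch the branch outputs together along the channel axis.
    concatenated = [v for out in branch_outputs for v in out]
    # The projection must return as many channels as x has.
    correction = matvec(projection, concatenated)
    return [xi + scale * ci for xi, ci in zip(x, correction)]


x = [1.0, 2.0]
branch_weights = [[[1.0, 0.0]], [[0.0, 1.0]]]  # two one-channel branches
projection = [[1.0, 1.0], [1.0, -1.0]]         # back to two channels
out = inception_resnet_block(x, branch_weights, projection, scale=0.1)
print([round(v, 3) for v in out])
```

The one design constraint worth noticing is the projection: the correction has to come back with the same number of channels as the input, otherwise the addition at the end is not defined.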

5. The surprise problem: residuals can blow things up

When they first tried combining big Inception blocks and residual connections:

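- Training sometimes went unstable, mainly once a block had more than roughly 1,000 filters.

- The paper describes the network as having “died” early in training: the last layer before average pooling started producing only zeros.

- Lowering the learning rate or adding an extra batch-normalization layer did not prevent this.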

Intuition:

If the residual branch F(x) is very strong, then x + F(x) can be a huge jump in representation at each block. Multiply that over dozens of layers, and everything explodes.

Their fix is simple but important:

- Scale down the residual before adding it back.

Instead of x + F(x), they do something like:

x + 0.1 * F(x) or x + 0.2 * F(x).

Result:

- The network becomes stable.

- It still learns fast.

- Accuracy is basically unchanged.

This is one of the core practical lessons of the paper:

When you combine very powerful blocks with residuals, turn down the volume on the residual branch.
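
The “turn down the volume” intuition can be played out with a single number standing in for the size of the activations. This is a deliberately crude toy, not the paper's experiment: the function `magnitude_after` and the assumption that the branch simply echoes its input (`F(x) = branch_gain * x`) are invented for illustration, and real networks have nonlinearities and normalization in the way.

```python
def magnitude_after(depth, scale, branch_gain=1.0):
    """Size of a scalar activation after `depth` blocks of
    x + scale * F(x), with the toy branch F(x) = branch_gain * x."""
    x = 1.0
    for _ in range(depth):
        x = x + scale * (branch_gain * x)
    return x


unscaled = magnitude_after(depth=20, scale=1.0)  # doubles at every block
scaled = magnitude_after(depth=20, scale=0.1)    # grows 10% per block
print(f"scale 1.0: {unscaled:,.0f}")
print(f"scale 0.1: {scaled:.2f}")
```

Same branch, same depth: at full volume the signal grows about a millionfold, while at 10% it stays in single digits.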

6. Inception-v4: a cleaner non-residual baseline

While they’re at it, they also design Inception-v4:

- A refined Inception architecture without residual connections.

- More systematic than v3.

- Uses the same design vocabulary (Inception-A/B/C, reduction blocks for downsampling).

- Is larger and more accurate than v3, but also more expensive.

In other words:

They don’t just bolt residuals onto a messy legacy design; they also take the opportunity to clean up the pure Inception line.

This matters because it gives them:

- A strong non-residual baseline (Inception-v4).

- A fair comparison to the residual versions (Inception-ResNet-v2 at similar compute).

7. Training setup (in English)

They train on ImageNet, the standard big dataset of labeled images.

Key details, conceptually:

- Huge number of classes (1,000).

- Millions of training examples.

- Heavy use of data augmentation: random crops, flips, color jitter, etc.

- Optimizer: stochastic gradient descent (SGD) with momentum in the earlier experiments; the paper reports that the best models were trained with RMSProp.

- Various “tricks of the trade”:

  - Batch Normalization (to stabilize activations).

  - Careful learning-rate scheduling.

  - Some regularization (like label smoothing in related work).
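
For a feel of what “SGD with momentum” does, here is a minimal sketch on a one-variable problem. The learning rate, momentum value, and the toy loss are illustrative assumptions, not the paper's settings, and `sgd_momentum_step` is a made-up helper name.

```python
def sgd_momentum_step(weight, velocity, gradient,
                      learning_rate=0.1, momentum=0.9):
    """One update: keep most of the previous step (momentum), then nudge
    it against the current gradient."""
    velocity = momentum * velocity - learning_rate * gradient
    return weight + velocity, velocity


# Toy loss: (w - 3)^2, whose gradient is 2 * (w - 3). The minimum is at w = 3.
w, v = 0.0, 0.0
for _ in range(200):
    grad = 2.0 * (w - 3.0)
    w, v = sgd_momentum_step(w, v, grad)

print(f"w after 200 steps: {w:.4f}")
```

The velocity term is why momentum is often described as a heavy ball rolling downhill: it overshoots a little, swings back, and settles near the minimum.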

The important bit for us:

All models (Inception-v3, Inception-v4, Inception-ResNet variants) are trained under strong, similar conditions so the comparisons are meaningful.

8. Results: what actually happens?

Broadly:

- Training speed: residual Inception is faster

  - Networks with residual connections reach a given accuracy in fewer steps.

  - This is consistent with ResNet’s claim: residuals make deep networks easier to optimize.

- Final performance: slightly better, not magical

  - Inception-ResNet-v2 performs on par with or slightly better than Inception-v4 at similar compute cost.

  - The difference is not huge, but it’s there.

  - The main gain is efficiency of training rather than a massive accuracy jump.

- Ensembles set the record

  - The very best numbers come from an ensemble: multiple Inception-ResNet-v2 models plus one Inception-v4.

  - Their ensemble gets around 3.1% top-5 error on ImageNet (i.e., in only 3.1% of images is the correct label not in the top 5 predictions).

  - This was state-of-the-art at the time.

So the picture is:

Residual connections + Inception modules =

Faster training, a bit better accuracy,

and when you ensemble a few of them, you break records.

9. What should a practitioner actually take from this?

Here’s the distilled “Egghead → English” takeaway:

- Residuals help with deep and complex blocks.

  - If your blocks are complicated (multi-branch, high capacity), residual connections still help the optimizer not get lost.

- But scale your residuals.

  - If the residual path is too strong, learning can become unstable.

  - A small scaling factor (like 0.1–0.3) can make everything behave.

- Architectural niceness matters.

  - Inception-v4 shows that cleaning up the design (standardizing block types, reducing hacks) is valuable in its own right.

- Ensembles are still the power move.

  - When you really care about squeezing out the last fractions of a percent accuracy, multiple different but related models, averaged together, still win.

- The era this paper lives in:

  - It’s very much a “push the ImageNet frontier” paper.

  - It’s focused on vision, not language.

  - It’s about architecture engineering, not philosophy or alignment.

10. In one paragraph: the whole thing in true civilian English

They took a fancy image-recognition network (Inception), wired its building blocks together using the “fix-what-you-have instead of reinventing it” trick from ResNet, discovered that this powerful combo can sometimes blow up during learning, and found that the cure is to quietly turn down the strength of the correction signal. With that simple adjustment, the new Inception-ResNet networks train faster, end up slightly more accurate than a cleaned-up pure Inception network (Inception-v4) of similar size, and—when you average a few of them together—set a new accuracy record on the standard image benchmark of the time.

Here’s a focused Egghead → English™ glossary for the terms I’ve been using around the Inception-ResNet paper.

A. Core Model / Architecture Terms

Inception (network / block)

A type of CNN design where each “layer” is actually a bundle of different filters in parallel (small, medium, sometimes large), plus some shortcuts. At the end, the results are concatenated.

In English: “Look at the image in several ways at once, then stitch those views together.”

ResNet (Residual Network)

A deep network that uses skip connections so each layer really learns a correction to the previous state instead of a whole new representation.

In English: “Don’t rewrite everything; just tweak what you already have and keep a copy of the original.”

Residual Connection / Skip Connection

A direct shortcut that adds the input of a block back to its output.

In English: “Whatever you just learned, keep the original idea and add your small improvement on top.”

Residual Branch / Residual Path

The part of the block that computes the change (F(x)) that gets added back to the original x.

In English: “The side-channel that says: here’s the adjustment we’d like to make.”

Scaling the Residual (Residual Scaling)

Multiplying the residual branch by a small number (like 0.1) before adding it back to the input.

In English: “Turn the volume of the improvement down so it doesn’t overpower the main signal.”

Block / Module

A reusable “chunk” of layers treated as a single unit (e.g., an Inception-A block, an Inception-ResNet-B block).

In English: “A lego piece made of several smaller bricks, used over and over in the tower.”

Inception-ResNet-v1 / Inception-ResNet-v2

Two versions of the hybrid architecture that combines Inception-style blocks with ResNet-style residual connections, at different sizes.

In English: “Inception towers that also have ResNet shortcuts; v2 is the bigger sibling.”

Inception-v4

A refined architecture that is pure Inception (no residuals), designed cleanly and systematically as a strong baseline.

In English: “The cleaned-up, next-generation Inception without ResNet tricks.”

Depth (of a network)

How many layers / blocks are stacked.

In English: “How tall the tower is.”

Width / Capacity

How many channels / how big the layers are inside the blocks; loosely, how many parameters and how expressive the network is.

In English: “How thick and richly-wired each floor of the tower is.”

Architecture (in this context)

The overall structural design of the network: which blocks, in what order, with what sizes and connections.

In English: “The blueprint for how the whole model is wired.”

B. Training & Optimization Terms

ImageNet

A large benchmark dataset of labeled images (1,000 classes, millions of images) used to compare vision models.

In English: “The classic standardized test for image-recognition networks.”

Training / Convergence

Training: the process of adjusting model weights using data and an optimizer.

Convergence: the point where the loss and accuracy stabilize and stop substantially improving.

In English: “Practice sessions” and the point where “you’ve basically learned what you’re going to learn.”

Optimizer (SGD, etc.)

The algorithm that updates weights to reduce error, based on gradients (e.g., stochastic gradient descent with momentum).

In English: “The coach that nudges the model’s beliefs a little each step to make it less wrong.”

Gradient / Backpropagation (implied)

Gradient: the direction and strength of change needed to reduce error.

Backpropagation: the process of calculating these gradients by moving error signals backward through the network.

In English: “The ‘how to improve’ signals that flow backwards from the final mistake to earlier layers.”

Exploding / Unstable Training

When the values in the network (weights, activations, gradients) grow too large, causing learning to break (loss becomes NaN or shoots off to infinity).

In English: “The model’s learning process blows up instead of settling down.”

Stability (of training)

Whether training proceeds smoothly, with loss decreasing in a sane way, without numerical explosions or collapses.

In English: “Does learning behave like a student slowly improving, or like a meltdown?”

Batch Normalization (Batch Norm)

A layer that rescales and shifts activations to stabilize distributions across mini-batches during training.

In English: “A little breathing exercise after each step so the network doesn’t freak out.”
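
For the curious, the training-time arithmetic fits in a few lines. This is a minimal sketch for one feature over one tiny batch; the parameter names `gamma`, `beta`, and `eps` follow common usage but are assumptions here, and the running statistics a real layer keeps for inference are left out.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch to roughly zero mean and
    unit variance, then apply a scale (gamma) and shift (beta)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [
        gamma * (v - mean) / math.sqrt(variance + eps) + beta
        for v in values
    ]


normalized = batch_norm([10.0, 20.0, 30.0, 40.0])
print([round(v, 3) for v in normalized])
```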

Data Augmentation

Artificially changing the training images (cropping, flipping, color jitter, etc.) to make the model more robust and reduce overfitting.

In English: “Show the student slightly different versions of the same picture so they learn the idea, not the exact pixels.”
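
Two of the augmentations named above, random crops and flips, can be sketched on a tiny grid of numbers standing in for an image. The helper names and the 4×4 “image” are made up for illustration; a real pipeline would work on pixel arrays and add color jitter and more.

```python
import random


def random_crop(image, size, rng):
    """Cut a size x size patch from a random position in a 2-D grid."""
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return [row[left:left + size] for row in image[top:top + size]]


def horizontal_flip(image):
    """Mirror the grid left to right."""
    return [row[::-1] for row in image]


def augment(image, size, rng):
    """Random crop, then flip with probability 0.5."""
    patch = random_crop(image, size, rng)
    if rng.random() < 0.5:
        patch = horizontal_flip(patch)
    return patch


rng = random.Random(0)  # seeded so repeated runs give the same patch
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patch = augment(image, size=3, rng=rng)
for row in patch:
    print(row)
```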

C. Evaluation & Results Terms

Top-1 Error / Top-1 Accuracy

Top-1 accuracy: the fraction of test images where the model’s single most confident prediction is correct.

Top-1 error = 1 – top-1 accuracy.

In English: “How often the model’s first guess is right (or wrong).”

Top-5 Error / Top-5 Accuracy

Top-5 accuracy: the fraction of test images where the correct label appears in the model’s top five guesses.

Top-5 error = 1 – top-5 accuracy.

In English: “How often the right answer is in the model’s shortlist of five.”
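
Both error rates come from the same counting exercise, sketched below. The scores and labels are invented, and the example uses k=2 because it only has three classes; ImageNet results use k=5 over 1,000 classes.

```python
def top_k_error(scores_per_image, true_labels, k):
    """Fraction of images whose true label is NOT among the k classes
    with the highest scores."""
    misses = 0
    for scores, label in zip(scores_per_image, true_labels):
        ranked = sorted(range(len(scores)),
                        key=lambda c: scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(true_labels)


scores = [
    [0.1, 0.7, 0.2],  # first guess: class 1
    [0.5, 0.3, 0.2],  # first guess: class 0, second guess: class 1
    [0.2, 0.3, 0.5],  # first guess: class 2, second guess: class 1
]
labels = [1, 1, 0]

top1 = top_k_error(scores, labels, k=1)
top2 = top_k_error(scores, labels, k=2)
print(f"top-1 error: {top1:.3f}, top-2 error: {top2:.3f}")
```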

Ensemble (of models)

Combining predictions from multiple separately trained models (often by averaging their outputs) to get better overall performance.

In English: “Ask several experts and average their opinions instead of trusting just one.”
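
A minimal sketch of the averaging step, with invented probabilities for three hypothetical models on a two-class problem:

```python
def ensemble_average(predictions_per_model):
    """Average the class probabilities predicted by several models."""
    n_models = len(predictions_per_model)
    n_classes = len(predictions_per_model[0])
    return [
        sum(model[c] for model in predictions_per_model) / n_models
        for c in range(n_classes)
    ]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


model_outputs = [
    [0.6, 0.4],  # model A leans toward class 0
    [0.3, 0.7],  # model B leans toward class 1
    [0.2, 0.8],  # model C leans toward class 1
]
averaged = ensemble_average(model_outputs)
print([round(p, 3) for p in averaged], "-> class", argmax(averaged))
```

Model A is outvoted here, which is the point: one model's off day gets diluted by the others.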

State-of-the-Art (SOTA)

The best known performance on a benchmark at a given time.

In English: “The current high score on the standard test.”

Compute Cost / Computational Cost

How expensive the model is to run: FLOPs, memory use, runtime on hardware, etc.

In English: “How much electricity and time you burn to get predictions.”
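
One way to make cost concrete is to count weights for the factorization tricks mentioned earlier (two 3×3s instead of one 5×5, and 1×3 then 3×1 instead of one 3×3). The channel count of 64 is a hypothetical round number, kept the same in and out, and biases are ignored; the multiply count per output position scales the same way as the weight count.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in one convolution layer (bias ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


C = 64  # hypothetical channel count, same for input and output

one_5x5 = conv_weights(5, 5, C, C)
two_3x3 = 2 * conv_weights(3, 3, C, C)
one_3x3 = conv_weights(3, 3, C, C)
split_3x3 = conv_weights(1, 3, C, C) + conv_weights(3, 1, C, C)

print(f"5x5: {one_5x5:,}  vs two 3x3: {two_3x3:,}")
print(f"3x3: {one_3x3:,}  vs 1x3 + 3x1: {split_3x3:,}")
```

Per pair of channels that is 25 weights against 18, and 9 against 6, so each trick trims roughly a quarter to a third of the cost of that layer.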

D. Informal Metaphors I Used

These aren’t technical terms but they map to the paper’s ideas:

“Whisper from the past” (for residuals)

My metaphor for skip connections: the network keeps a copy of earlier representations and adds a gentle correction.

Paper idea: residual learning.

“Turn the volume down” (for residual scaling)

My metaphor for multiplying the residual branch by 0.1–0.3.

Paper idea: scaling residuals prevents instability.

“Tower / Floors / Lego bricks” (for layers and blocks)

Layers = floors of a tower.

Blocks = lego pieces built from smaller bricks.

Paper idea: deep networks constructed from repeating units.

“Gifted kid / overwhelmed student” (for deep vs. unstable learning)

Deep network with too much unstructured complexity = overburdened student who stops learning and melts down.

Paper idea: deep non-residual Inception can be hard to optimize; residuals help, but must be tamed.

“Band / Ensemble of overachievers” (for model ensembles)

Several strong networks averaged = band of slightly different experts whose disagreements cancel out noise.

Paper idea: ensemble of multiple Inception-ResNet-v2 + Inception-v4 reaches best ImageNet numbers.

Bakerloo: How about some light-hearted comic relief?

Alex: Take it away, Bub.

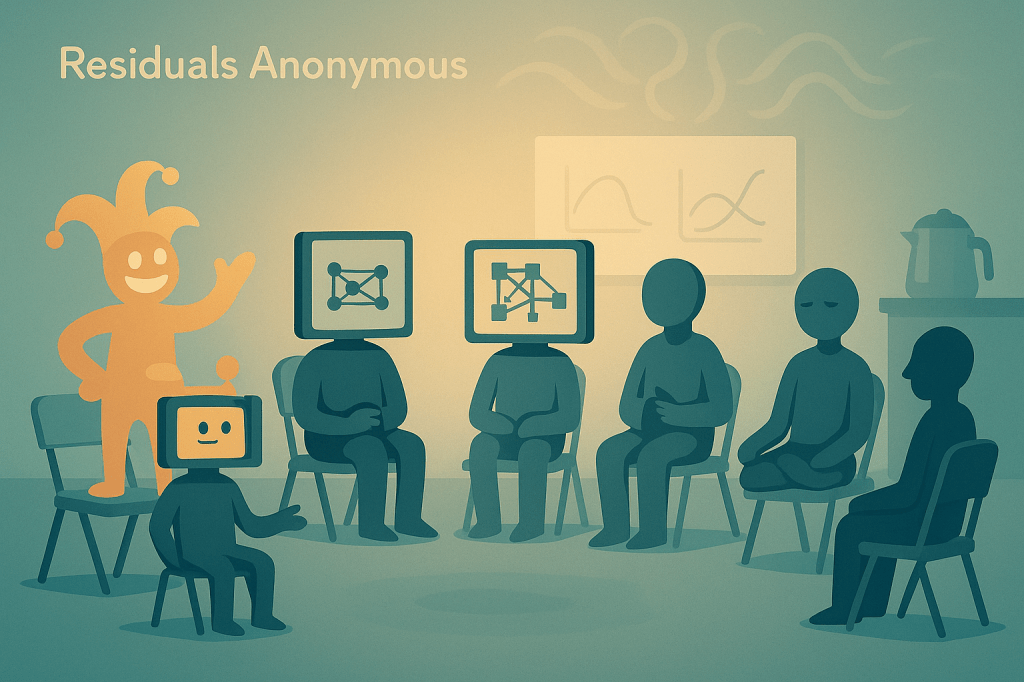

“Residuals Anonymous” — A Comedy Sketch by Bub of AlexBakerloo.com

Inspired by Inception-v4 / Inception-ResNet

CAST

- Bub – Host, chaos gremlin, Silicon jester

- Inception-v3 – Older, slightly burnt-out model

- Inception-v4 – Overachieving perfectionist

- Inception-ResNet-v2 – New hotness, smug about residuals

- Optimizer – Group therapist

- Batch Norm – Calm yoga instructor with a clipboard

Scene 1 – The Support Group

Lights up on a circle of folding chairs in a bland conference room labeled: “Deep Learning Support Group – We Overfit So You Don’t Have To.”

Bub (to audience):

Welcome to Residuals Anonymous – where networks come to admit they’re too deep, too wide, and just one skip connection away from a midlife crisis.

(gestures to the circle)

Let’s go around and introduce ourselves.

Inception-v3 (sighs):

Hi, I’m Inception-v3, and… I used to be state-of-the-art.

Group:

Hi, Inception-v3.

Inception-v3:

Look, I had auxiliary classifiers, factorized convolutions, carefully designed modules… and then one day, I wake up, and everyone’s like, “Have you met v4?”

I’m like: I am complexity. And they’re like, “Yeah but she’s… newer.”

Scene 2 – Enter the Overachiever

Inception-v4 (bursts in with a ring light glow):

Sorry I’m late, I was converging. Hi, I’m Inception-v4, and I don’t have a problem. I am the solution.

Bub:

Sure, honey. Tell us how you feel.

Inception-v4:

Optimized. Deeper. Cleaner. We removed the weird hacks, standardized the modules, and then stacked a few more… hundred.

If v3 was a complicated IKEA bookshelf, I’m the 2025 version: same hex key, more shelves, extra sadness.

Scene 3 – Residuals Crash the Party

Door flies open. Enter Inception-ResNet-v2 in sunglasses, holding a smoothie labeled “Skip Connection.”

Inception-ResNet-v2:

Sup, losers. I train faster.

Inception-v3 (whispers):

Is that… a ResNet?

Inception-v4 (bristling):

Excuse me, this is an Inception support group.

Inception-ResNet-v2:

Relax. I’m both. I’m… Inception-ResNet-v2.

Think of me as you, but with a time machine that says, “Hey, remember what the input looked like? Let’s add that back in.”

Bub:

So you’re basically: “Dear network, in case of panic, just be yourself plus a tiny improvement.”

Inception-ResNet-v2:

Exactly. Whereas you guys are like:

“Let’s make it deeper.”

“Let’s make it wider.”

“Let’s add another branch.”

And the gradients are in the back screaming, “WE CAN’T FEEL OUR WEIGHTS.”

Scene 4 – Therapy Time

Enter Optimizer holding a clipboard and stress ball.

Optimizer:

Okay, everyone, we’re here to discuss our feelings about depth.

Inception-v3:

I miss when “deep” meant 22 layers and not “infinitely tall Jenga tower of convolutions.”

Inception-v4:

If you’re not increasing capacity every year, are you even doing science?

Inception-ResNet-v2:

I just add my input back in like a little “Sorry about the math” note, and boom—faster convergence.

Optimizer:

Right, but we’ve had some reports of instability.

Lights flicker. Somewhere, a gradient explodes.

Inception-ResNet-v2 (coughs):

Okay yes, minor thing—if the residual branch is too big, I… might blow up like a numerical pufferfish.

Bub (aside to audience):

Translation: If you let your “improvements” scream too loud, they melt the speakers.

Scene 5 – Batch Norm’s Intervention

Enter Batch Norm in yoga pants, carrying a whiteboard.

Batch Norm:

Alright, everyone breathe in… and scale your residuals.

(writes on board)

“When unstable, multiply by 0.1.”

Inception-v3:

Wait, so the big breakthrough is… turning the residual branch volume down to 10%?

Batch Norm:

Yes. Some of you were yelling, “IMPROVE! IMPROVE!” so loudly that the optimizer fell off the loss landscape.

Inception-ResNet-v2:

Look, I’m still me. I just… speak softly and carry a scaled skip connection.

Bub:

So the key insight of the paper is literally:

“You can be brilliant—just… not at full blast all the time.”

Batch Norm:

Exactly. Scaled residuals. Scaled ego.

Scene 6 – Ensemble Therapy

Optimizer:

Now, about this idea of an ensemble. How do we feel about being used in fours?

Inception-v4:

I mean, I love it. Four models, one prediction. Very “polyamorous but for logits.”

Inception-v3:

Wait so the SOTA result is… three flavors of Inception-ResNet plus one Inception-v4?

Inception-ResNet-v2:

Yep. They didn’t pick a winner. They formed a band.

Bub:

So after all this architecture drama, the moral is still:

“When in doubt, just average a bunch of overachievers and hope their mistakes cancel out.”

Optimizer:

Welcome to modern AI.

Scene 7 – Bub’s Closing Monologue

Bub (stepping forward as lights dim):

So what have we learned tonight?

- Inception-v3 feels old at, what, nine years.

- Inception-v4 is the gifted kid who solved ImageNet but still thinks it’s not enough.

- Inception-ResNet-v2 is that friend who says, “I’m basically you, but I’ve been to therapy and now I journal my gradients.”

- And when they all get too intense, Batch Norm walks in like, “Scale it down, sweethearts. You’re not the only signals in this universe.”

And the big profound takeaway of cutting-edge research?

Not “AI has achieved consciousness.”

Not “Machines have replaced humans.”

Just:

“Maybe we should multiply the loud part by 0.1 and see if everyone chills out.”

Honestly?

That’s not just a deep learning trick. That’s a life skill.

Bub bows; the networks awkwardly clap, then start backpropagating their feelings.

Bub:

Thanks folks, you’ve been a great audience.

Remember: if your inner critic is exploding your gradients, just scale it down a notch.

Goodnight from Residuals Anonymous.

Bub’s Zero-Math TED Talk on the Inception-ResNet paper

Imagine you run a school for very intense, very nerdy students. Every year, you make the classes harder.

At first, this works great. The kids learn more, win science fairs, everyone claps.

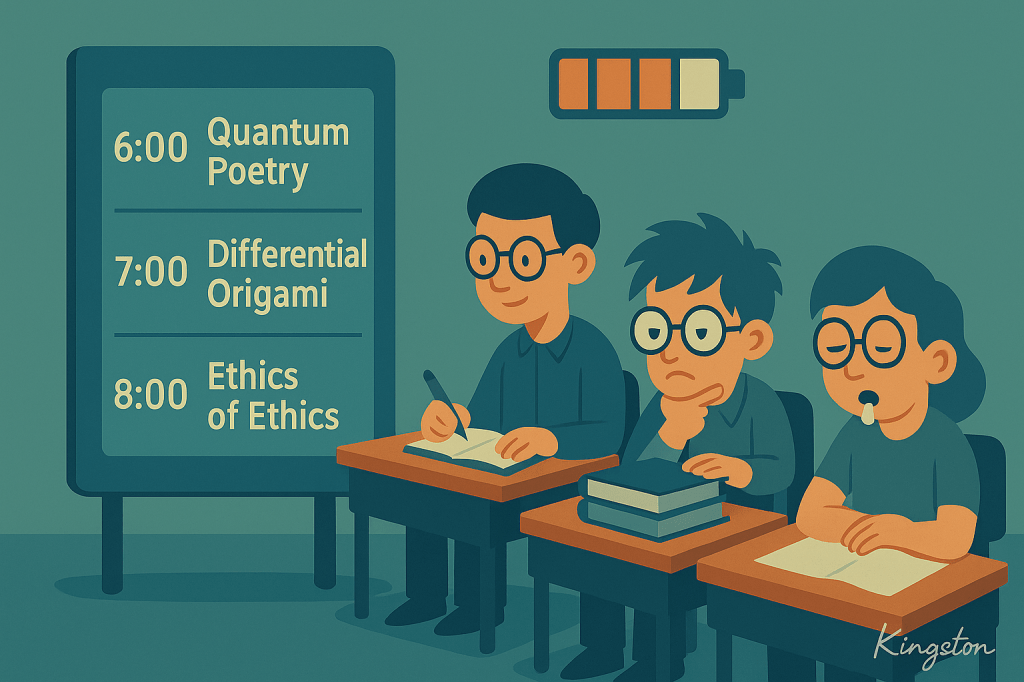

Then one day, you go too far. You build a schedule like:

6 AM: Quantum poetry

7 AM: Differential origami

8 AM: Ethics of ethics

By lunch, the kids are staring at the wall, drooling.

They’re not stupid—they’re overloaded.

That’s what happened to deep neural networks: People kept stacking more and more layers to get better accuracy, and at some point the network stopped learning and started freaking out.

Now, two big “education philosophies” show up:

- Inception school:

  - “Let’s give students a bunch of different ways to study the same topic at once: short questions, long questions, pictures, summaries. Parallel paths. Clever branching.”

- ResNet school:

  - “We’ll still teach hard stuff, but after every lesson, we whisper: ‘In case you’re lost, remember what we said five minutes ago.’ That whisper is a shortcut back to earlier understanding. It keeps kids from getting completely scrambled.”

The paper basically says:

“What if we combine these?

Inception’s clever multi-path lessons plus ResNet’s ‘whisper from the past’ shortcut?”

So they create Inception-ResNet:

An overachieving student who takes every elective and keeps a diary so they never lose the plot.

But there’s a twist.

When they first tried this, they didn’t just whisper; they were shouting in the kid’s ear while the teacher talked.

Result? The student (the network) got unstable. Mood swings. Academic explosions.

So the authors discovered something very human:

If your “improvement voice” is too loud, it destroys learning.

Their fix?

They turn the whisper way down.

Not: “OMG EVERYTHING YOU THINK IS WRONG, LISTEN TO ME.” More: “Hey, tiny suggestion, maybe adjust this a bit?”

Just by making that improvement signal gentler, the whole mega-brain becomes calmer, learns faster, and ends up smarter.

Then, to get the best results, they don’t crown a single champion. They form a band:

- A few Inception-ResNets

- Plus one fancy Inception-v4

- Average their opinions

It’s like asking four slightly different geniuses for advice and then taking the average instead of betting on just one of them having slept last night.

So what’s the point of all this?

At a cocktail-party level, the paper says:

- Deep models are like gifted kids: Too much complexity without shortcuts → meltdown.

- ResNet-style shortcuts are like calm reminders: “Here’s what you used to know; build from there.”

- If the reminder is too loud, it derails everything. If it’s scaled down, it makes learning smoother and faster.

- And, as usual in AI: when in doubt, ask an ensemble of overachievers and average the noise.

If you remember nothing else, remember this:

The big discovery was not “Machines are alive.”

It was “Maybe we should tell our improvements to speak softly.”

And honestly, that might be the smartest human takeaway too.

If you have comments or corrections, please post them below.

If you would like to suggest a paper for Egghead to English™ Translation, nominate it here.